Assessment Design Principles: Building Better Evaluations for Online Learning

Most online courses fail at one critical point: assessment. Learners sit through polished lessons, click through well-designed slides, answer a few questions, and receive a certificate that says “Well done.” But did they actually learn anything? According to several studies, over 60% of learners feel that online assessments rarely reflect their real skills (Source: ScienceDirect).

That’s because many digital learning assessments simply copy traditional exams into an online format. They test memory, not mastery. They evaluate recall, not reasoning. Worse, they often feel like a formality rather than an engaging learning experience.

The good news is that this can change.

In this blog, we will explore practical Assessment Design Principles that help you move from “Did they pass?” to “Did they truly learn?” You’ll learn:

- What makes a digital assessment truly effective

- How to design fair, engaging, and application-based evaluations

- Real assessment formats you can start using right now

Let’s build online evaluations that really teach while they test.

What Are Assessment Design Principles?

Assessment Design Principles are structured guidelines that help you create meaningful, accurate, and learner-friendly evaluations. They ensure that every question, scenario, or challenge has a clear purpose.

Put simply: if you can’t link a question to a learning goal, it does not belong in the assessment.

Why They Matter in Online Learning

- Online learning serves diverse learners with different strengths.

- Conventional question types often fail to measure real-world competence.

- Well-designed assessment builds trust between trainers and learners.

- Data-backed assessments also help leaders measure training ROI.

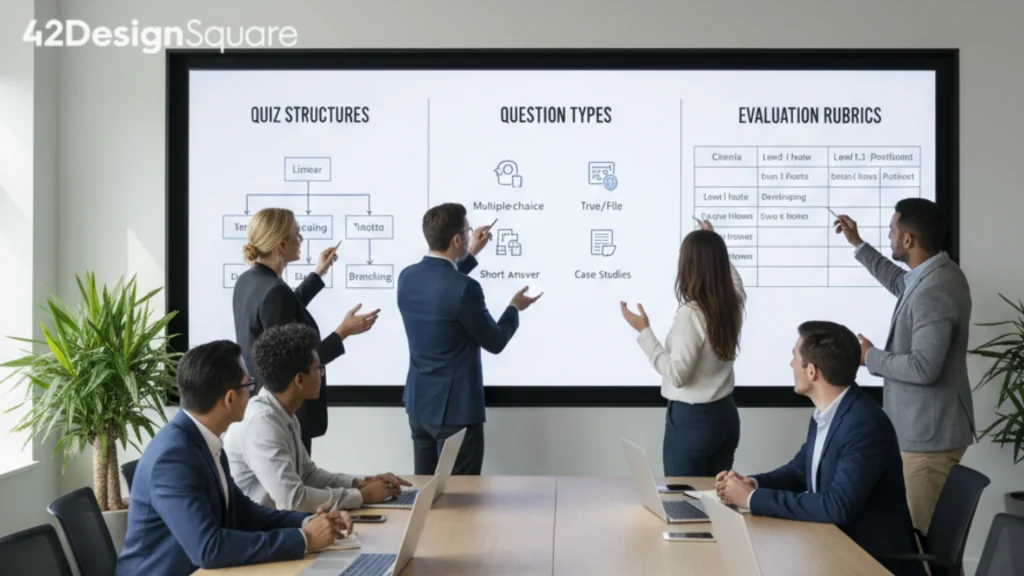

Core Assessment Design Principles for Digital Learning

1. Align with Learning Objectives

Every assessment item must connect to a learning objective.

| Learning Objective | Best Question Type |
| --- | --- |
| Identify a Concept | Multiple Choice |
| Apply a Process | Scenario Simulation |
| Demonstrate Skill | Video/Screen Task |

If you can’t trace the question back to an objective, delete it.
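For teams that keep their question bank in a spreadsheet or an LMS export, this traceability rule can be checked automatically. The sketch below is a minimal Python illustration, assuming each question carries a hypothetical `objective_id` field and that the course objectives are listed as IDs. The names `Question` and `untraceable_questions` are illustrative only and are not part of any LMS API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Question:
    text: str
    # Hypothetical field: the ID of the learning objective this item measures.
    # None means the question was never mapped to an objective.
    objective_id: Optional[str]


def untraceable_questions(questions, objective_ids):
    """Return the questions that cannot be traced back to a known objective."""
    return [q for q in questions if q.objective_id not in objective_ids]


# Illustrative objective IDs, loosely based on the objectives in the table above.
objectives = {"identify-concept", "apply-process", "demonstrate-skill"}

quiz = [
    Question("Which term best describes this concept?", "identify-concept"),
    Question("What would you do if a customer asked for a refund?", "apply-process"),
    Question("In what year was the company founded?", None),
]

# Print every question that fails the traceability check.
for q in untraceable_questions(quiz, objectives):
    print("Remap or delete:", q.text)
```

Any question this check flags is either a candidate for deletion or a sign that an objective is missing from your list.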


2. Balance Knowledge and Application

Avoid questions like “What is X?” Instead, ask “What would you do if X happened?”

Example formats:

- Small branching scenarios

- Role-based decision trees

- Real-life conflict simulations

3. Use Multiple Question Formats

Different learners process information differently. Mix formats like:

- Drag-and-drop sorting

- Matching pairs

- Timed micro-challenges

- Audio or video responses

- Peer evaluation tasks

Platforms like Articulate Rise, LearnDash, 360Learning, and Moodle already support many of these.

4. Be Transparent with Scoring

Confusion leads to frustration. Use rubrics or clear decision criteria.

Example Rubric (for Presentation Skill Assessment):

| Criteria | Excellent Work | Good | Needs Work |
| --- | --- | --- | --- |
| Clarity | Very Clear | Mostly Clear | Hard to Understand |

5. Treat Feedback as Part of Learning

Replace “Incorrect.” with:

- “Not quite, let’s think about this angle…”

- “You selected A. But in real-world cases, B is more secure because…”

Valuable feedback reinforces learning, even when the answer is wrong.

Synchronous vs Asynchronous Assessments: Which to Use?

| Scenario | Ideal Format | Tool Example |
| --- | --- | --- |
| Sales Role-Play Practice | Live Assessment | Zoom + Scorecard |
| Knowledge Check | LMS Quiz | Moodle / TalentLMS |
| Critical Thinking | Branching Scenario | H5P / Rise |


Case Study: From Quiz to Real-World Assessment

A corporate sales training program saw high quiz scores but low sales performance.

42 Design Square redesigned the assessments using customer conversation simulations.

Results:

- Fewer learners passed right away

- But sales conversion rose by 40% over the next quarter

- Real competence replaced superficial recall

Quick Checklist Before Publishing an Assessment

- Does every question link to a goal?

- Does it test thinking, not guesswork?

- Does the feedback help learners improve?

- Would this assessment actually work in the real world?

Conclusion

Assessments should not be gatekeepers. They should be growth tools. When designed well, they do not just measure learning; they reinforce it.

At 42 Design Square, we help organizations turn passive learners into confident performers through interactive assessments, scenario-based testing, and flexible evaluations.

Want to build smarter online assessments?

Let’s design them together.

Contact us at 42 Design Square and transform your training outcomes.